Google Panda 4, and blocking your CSS & JS

A month ago Google introduced its Panda 4.0 update. Over the last few weeks we’ve been able to “fix” a couple of sites that got hit by it. Both of these sites lost more than 50% of their search traffic in that update. After the fix, they regained their previous positions in the search results. Sounds too good to be true, right? Read on. It was actually very easy.

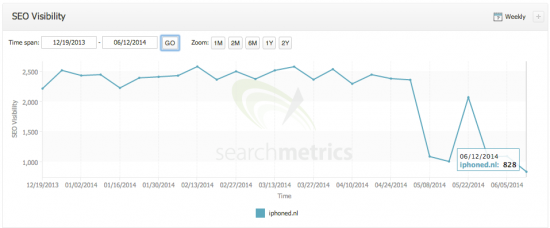

Last week Peter – an old industry friend who runs a company called BigSpark – came by the Yoast office. BigSpark owns a website called iPhoned.nl and they’d been hit by the ever so friendly Google Panda. Now, iPhoned.nl has been investing in high quality content about (you guessed it) iPhones for a few years, and in the last year they’ve stepped it up a notch. They push out lots of news every day with a strong focus on quality, and their site looks great. Which is why I was surprised that they were hit. You just don’t want your Searchmetrics graph to look like this:

Notice the initial dip, then the return and the second dip, leaving them at a third of the SEO visibility they were “used to”. I dove into their Google Webmaster Tools and other data to see what I could find.

Fetch as Google’s relation to Google Panda

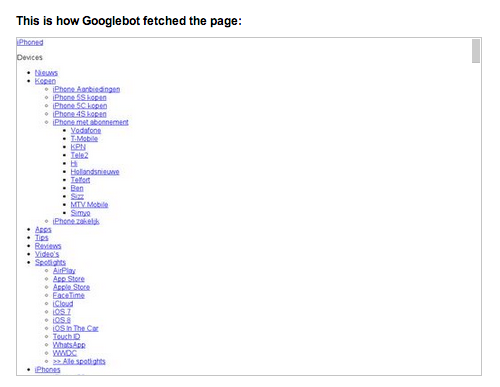

In Google Webmaster Tools, Google recently introduced a new feature on the fetch as Google page: fetch and render. Coincidence? I think not. They introduced this a week after they rolled out Google Panda. This is what it showed when we asked it to fetch and render iPhoned’s iPhone 6 page:

Even for fans of minimalism, this is too much.

Now, iPhoned makes money from ads. It doesn’t have a ridiculous number of them, but because it uses an ad network, a fair number of scripts and pixels get loaded. My hypothesis was: if Google can’t fetch the CSS and JS, it can’t render the page, so it can’t determine where the ads on your page are. In iPhoned’s case, Google couldn’t fetch the CSS and JS because they were accidentally blocked in their robots.txt after a server migration.
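To make the mechanism concrete, here is a minimal sketch using Python’s standard-library `urllib.robotparser` to check which asset URLs a robots.txt keeps Googlebot away from. The robots.txt content and the `example.com` URLs are hypothetical stand-ins (assuming a migration left the theme and plugin folders disallowed), not iPhoned’s actual file. Be aware that `robotparser` applies the first matching rule in file order, which is simpler than Google’s own matching, so treat it as a rough check rather than a replica of Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt, assuming a server migration accidentally left the
# theme and plugin folders (where CSS and JS usually live) disallowed.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-content/themes/
Disallow: /wp-content/plugins/
"""

# Hypothetical URLs a page might load (two assets and the page itself).
ASSETS = [
    "https://www.example.com/wp-content/themes/mytheme/style.css",
    "https://www.example.com/wp-content/plugins/ads/ads.js",
    "https://www.example.com/iphone-6/",
]


def blocked_assets(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that `agent` may not fetch under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the file as read
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    for url in blocked_assets(ROBOTS_TXT, ASSETS):
        print("blocked:", url)
```

With this file the stylesheet and the ad script are reported as blocked while the page itself is not: Google can read the text, but not the files that tell it how the page is laid out.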

Google runs so-called page layout algorithms to determine how many ads you have. They particularly check how many ads you have above the fold. If you have too many, that’s not a good thing and it can seriously hurt your rankings.
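Google hasn’t published how its page layout algorithms work, so the following is only a hypothetical illustration of the *kind* of measurement involved: the share of the first screen covered by ad boxes. The `Box` type, the 1000×800 viewport and the fold position are all assumptions for the example. The point it illustrates is that these box coordinates only exist after the CSS (and often the JS) has been applied; if those files are blocked, there is nothing to measure.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """A rendered element's position and size in CSS pixels (origin at top-left)."""
    x: float
    y: float
    width: float
    height: float


def area_above_fold(box: Box, viewport_width: float, fold_y: float) -> float:
    """Area of `box` inside the first screen: 0..viewport_width by 0..fold_y."""
    overlap_w = max(0.0, min(box.x + box.width, viewport_width) - max(box.x, 0.0))
    overlap_h = max(0.0, min(box.y + box.height, fold_y) - max(box.y, 0.0))
    return overlap_w * overlap_h


def ad_share_above_fold(ads: list[Box], viewport_width: float, fold_y: float) -> float:
    """Fraction of the first screen covered by ads (assumes the ads don't overlap)."""
    covered = sum(area_above_fold(ad, viewport_width, fold_y) for ad in ads)
    return covered / (viewport_width * fold_y)


if __name__ == "__main__":
    ads = [
        Box(0, 0, 1000, 100),     # hypothetical leaderboard across the top
        Box(700, 100, 300, 600),  # hypothetical sidebar block
        Box(0, 900, 300, 250),    # below the fold, contributes nothing
    ]
    print(ad_share_above_fold(ads, viewport_width=1000, fold_y=800))  # 0.35
```

Whatever threshold Google actually uses (if any) is unknown; the sketch only shows why rendering is a prerequisite for judging ad placement at all.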

In the past blocking your CSS was touted by others as an “easy” way of getting away from issues like this, rather than solving the actual issue. Which is why I immediately connected the dots: fetch and render and a Google Panda update? Coincidences like that just don’t happen. So I asked Peter whether we could remove the block, which we did on the spot. I was once again thankful for the robots.txt editor I built into our Yoast SEO plugin.

Remarkable resurrection

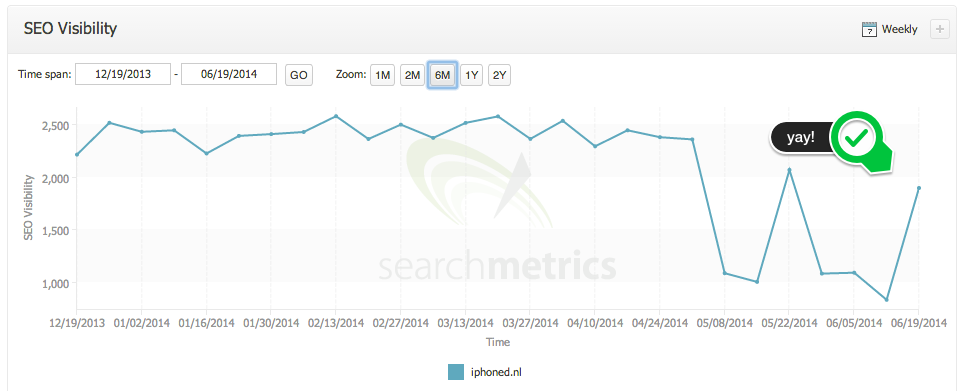

The result was surprising, even more so because of how quickly it worked. We changed that block a week ago, and their Searchmetrics graph now looks like this:

They’ve returned on almost all of their important keywords, just by allowing Google to crawl their CSS and JS.

When we saw this, we looked at some of our recent website review clients and found the exact same pattern. One of them turned out to have the same problem and already appears to be recovering too.

Confirmation from Google: don’t block your CSS & JS

Now, I don’t usually post my “SEO theories” on the web, mostly because I think that in many, many cases they do more harm than good, as they’re just theories. So I didn’t want to write this up without confirmation from Google that this was really the cause of the issue here. But then I read this live blog from last week’s SMX, and more specifically, this quote from Maile Ohye embedded in it:

“We recommend making sure Googlebot can access any embedded resource that meaningfully contributes to your site’s visible content or its layout”

That basically confirms our theory, which had already been proven in practice too, so I went ahead and wrote this post. Would love to hear if you’ve seen similar issues with the Google Panda 4 update, or (even better) if in a week from now you’re ranking again because you read this and acted!


Thank you for this great information. I have checked my sites, and nearly all had restrictions on the mentioned files. It took me some time to identify the issues in WMT and correct all the robots.txt files.

Great post on a bad Panda update that may have gone unnoticed. Google has been going hard on its ATF (above the fold) and BTF (below the fold) strategy for content vs. ads for quite some time now. It’s time for all serious site owners to rethink their content and script loading tactics.

Thank you for this post. I also noticed a strange drop in traffic on my projects. Now I’ll try opening up robots access to my CSS and JS.

Thanks so much for yet another useful article, Joost! I went off and fetched & rendered a few pages, and, similar to the posters above, I’m finding that external resources, such as Google Fonts, are being denied. I presume there is no way to stop this? That said, the pages otherwise render nicely, so this Panda update article actually put a smile on my face, sooo far :) All the best from Dublin

I believe this is just a coincidence. If your site is already a mess from a Panda 4.0 standpoint, changing this won’t fix your traffic drop; you have to work on ALL the Panda variables to expect a result.

Thanks for publishing this, Joost. It’s very informative. I am going to test all of my websites.

That was a fantastic comeback, Joost, and thanks for putting this up ;)

Like many of you, when I run a fetch & render in Google WMT, Google can only partially read my site. Most of the errors are about the bots being unable to read JavaScript/CSS or a plugin.

While troubleshooting, I tried deleting the Disallow settings in my robots.txt, which fixed several of the errors but not all. I also tried adding an allow-all rule, “user agent: * allow /”, which did not work.

Any advice?

THANK YOU for this post! My main money site took a major hit in the new Panda update. As soon as I found this article, I immediately logged into Google Webmaster Tools and checked Fetch as Google. Sure enough, I learned that robots.txt was blocking scripts. It’s too soon to tell whether fixing it solved the problem, but I’m at least grateful that you helped me find an issue I was unaware of. Thanks again for mentioning this.

Wow, thanks for putting this forward, Joost. I would never have thought CSS could affect SEO so drastically.

Another Google update that I thankfully didn’t get hit by! Hope everyone gets their rankings back!

Hi Guys,

I’d like to unblock these but my webmaster is worried that we’ll start filling up the index with the CSS files if we unblock them. Can any of you offer any reassurances that this won’t happen?

I’m thinking that:

a) Google presumably has a way of avoiding indexing the CSS if they are going ahead and telling everyone to unblock them.

b) Even if some got indexed, it’s not the end of the world. None of them would rank anywhere that could mess with user engagement metrics in the SERPs, and we can always remove them from the SERPs through GWT.

Any reassurance is very welcome.

thanks!

Peter K

What is a good way to test whether any of the JS or CSS files are being blocked? Thanks for the awesome test and news.

Hi Yoast and thanks for the heads up.

After reading your article I decided to remove the blocking of wp-includes from robots.txt, and as a result I can immediately see fetch and render in webmaster tools rendering my mobile site in a more accurate way. Too early to tell if it will influence my rankings but I really hope it will.

I’ll check back on your article in a few days, or sooner if rankings start to improve before that.

Nice post, Joost… what does it mean if you use, for example, Google Fonts on your site?

The tool reports that: “Style Sheet – Denied by robots.txt”

and it’s true!

http://fonts.googleapis.com/robots.txt

mmm…

Thanks a lot for sharing this case.

I lost 30% of my traffic after Panda 4.0! I’m still trying to understand the reasons for this traffic drop. I realised that my robots file is also blocking CSS files. Let’s see what happens. Thank you

Here’s some advice: don’t register domains with registered trademarks in them, especially if it’s an Apple trademark. They will likely get sued for the domain and lose it; Google traffic will be the least of their worries. Just thought I’d point that out.

You mentioned what the issue is, but how do you go about fixing it? I am having the same issue. How do we make sure JS and CSS are not denied by robots.txt?

Go to WMT and, under Fetch as Google, click Fetch and Render. If robots.txt is blocking CSS, you’ll know: the page will look like plain text. For JavaScript, look at the details below the render; WMT will list everything being blocked.
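Fetch and Render is the authoritative check, but if you want a quick offline look, here is a rough sketch: collect the `<script src>` and `<link rel="stylesheet">` URLs from a page’s HTML and test the same-host ones against your robots.txt with Python’s `urllib.robotparser`. The page HTML, robots.txt and `example.com` URLs are hypothetical, and `blocked_own_assets` is a name made up for this example. It only sees resources written into the HTML, not ones injected later by JavaScript, and `robotparser` uses first-match rule order rather than Google’s precedence.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse
from urllib.robotparser import RobotFileParser


class AssetCollector(HTMLParser):
    """Collects the URLs of external scripts and stylesheets in an HTML page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.assets: list[str] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # valueless attributes such as `async` map to None
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "script" and attrs.get("src"):
            self.assets.append(urljoin(self.base_url, attrs["src"]))
        elif tag == "link" and "stylesheet" in rel and attrs.get("href"):
            self.assets.append(urljoin(self.base_url, attrs["href"]))


def blocked_own_assets(html: str, page_url: str, robots_txt: str,
                       agent: str = "Googlebot") -> list[str]:
    """Return same-host CSS/JS URLs that `robots_txt` disallows for `agent`."""
    collector = AssetCollector(page_url)
    collector.feed(html)
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    host = urlparse(page_url).netloc
    return [
        url for url in collector.assets
        # Other hosts are governed by their own robots.txt, so skip them here.
        if urlparse(url).netloc == host and not parser.can_fetch(agent, url)
    ]


PAGE_HTML = """<html><head>
<link rel="stylesheet" href="/wp-content/themes/mytheme/style.css">
<link rel="stylesheet" href="http://fonts.googleapis.com/css?family=Open+Sans">
<script src="/wp-includes/js/jquery/jquery.js"></script>
<script src="/app.js" async></script>
</head><body></body></html>"""

ROBOTS_TXT = "User-agent: *\nDisallow: /wp-includes/\n"

if __name__ == "__main__":
    print(blocked_own_assets(PAGE_HTML, "https://www.example.com/iphone-6/", ROBOTS_TXT))
```

Here only the jQuery file under `/wp-includes/` is reported; the Google Fonts stylesheet is skipped because your own robots.txt has no say over `fonts.googleapis.com`.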

These are the errors I’m getting when I do a fetch and render. What would I need to change in my robots.txt?

http://fonts.googleapis.com/css?family=Open+Sans Style Sheet Denied by robots.txt

http://pagead2.googlesyndication.com/pagead/js/adsbygoogle.js Script Denied by robots.txt

http://www.stayonbeat.com/wp-includes/js/jquery/ui/jquery.ui.widget.min.js?ver=1.10.4 Script Denied by robots.txt

http://www.stayonbeat.com/wp-includes/js/jquery/ui/jquery.ui.core.min.js?ver=1.10.4 Script Denied by robots.txt
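A useful first step with a list like this is to sort the URLs by host. Each host’s rules live in the `/robots.txt` at the root of that host, so only the `www.stayonbeat.com` entries are governed by your own file; the Google Fonts and AdSense URLs are covered by Google’s files. A small sketch, using the URLs from the list above (the function names are made up for this example):

```python
from urllib.parse import urlparse

# The "Denied by robots.txt" URLs from the list above.
DENIED = [
    "http://fonts.googleapis.com/css?family=Open+Sans",
    "http://pagead2.googlesyndication.com/pagead/js/adsbygoogle.js",
    "http://www.stayonbeat.com/wp-includes/js/jquery/ui/jquery.ui.widget.min.js?ver=1.10.4",
    "http://www.stayonbeat.com/wp-includes/js/jquery/ui/jquery.ui.core.min.js?ver=1.10.4",
]


def robots_url(url: str) -> str:
    """robots.txt lives at the root of the host that serves the URL."""
    parts = urlparse(url)
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


def split_by_owner(urls: list[str], own_host: str) -> tuple[list[str], list[str]]:
    """Split URLs into (governed by your robots.txt, governed by someone else's)."""
    own, third_party = [], []
    for url in urls:
        target = own if urlparse(url).netloc.lower() == own_host.lower() else third_party
        target.append(url)
    return own, third_party


if __name__ == "__main__":
    own, third_party = split_by_owner(DENIED, "www.stayonbeat.com")
    for url in own:
        print("fixable in", robots_url(url), ":", url)
    for url in third_party:
        print("controlled by", robots_url(url))
```

The two `wp-includes` scripts come out as fixable in your own robots.txt; the other two point at `fonts.googleapis.com` and `pagead2.googlesyndication.com`, which you can’t change.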

Hey Bill,

Unfortunately you won’t be able to unblock fonts or AdSense, as these are being blocked by Google’s own robots.txt file, but that’s okay because Google is the one doing it… Right? :)

As for the other two JS files, you can do one of two things: change Disallow: /wp-includes to Allow: /wp-includes in your robots.txt or, for finer control, allow just those two JS files:

    Allow: /wp-includes/js/jquery/ui/jquery.ui.widget.min.js
    Allow: /wp-includes/js/jquery/ui/jquery.ui.core.min.js
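Why do those Allow lines win over a broader Disallow? Google documents that, for Googlebot, the most specific (longest) matching rule applies regardless of order, and on a tie between Allow and Disallow the less restrictive Allow is used; RFC 9309 describes the same precedence. Here is a minimal sketch of that precedence with plain prefix matching only (no `*` or `$`). `is_allowed` is a made-up helper, not an official parser, and note that Python’s own `urllib.robotparser` instead uses the first matching rule in file order.

```python
def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Precedence sketch: the longest matching rule wins and Allow wins a tie.
    Plain prefix matching only; '*' and '$' are not handled here."""
    best_len = -1
    allowed = True  # a path that matches no rule is allowed
    for directive, rule_path in rules:
        if not rule_path or not path.startswith(rule_path):
            continue  # an empty rule path matches nothing in this sketch
        is_allow = directive.lower() == "allow"
        if len(rule_path) > best_len or (len(rule_path) == best_len and is_allow):
            best_len = len(rule_path)
            allowed = is_allow
    return allowed


# The Disallow from the reply above plus the two file-level Allow lines.
RULES = [
    ("Disallow", "/wp-includes"),
    ("Allow", "/wp-includes/js/jquery/ui/jquery.ui.widget.min.js"),
    ("Allow", "/wp-includes/js/jquery/ui/jquery.ui.core.min.js"),
]

if __name__ == "__main__":
    for p in [
        "/wp-includes/js/jquery/ui/jquery.ui.core.min.js?ver=1.10.4",
        "/wp-includes/js/wp-embed.min.js",
    ]:
        print(p, "->", "allowed" if is_allowed(p, RULES) else "blocked")
```

The two jQuery UI files come out allowed, while any other file under `/wp-includes` stays blocked, and reordering the lines doesn’t change the result.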

After, fetch and render your page again both in Desktop and Smartphone views and check the result.

Thanks, Eric, for the advice :)

That explains how to find the problem, not how to fix it.

Exactly, and it seems that the virtual robots.txt file of WordPress SEO by default blocks the lot, so it would be nice to know how to fix the issue (if indeed it is a real issue to begin with…).

I think what we need is more information from Piet and Michael. Traditionally, to allow Google to see your js and css, you simply wouldn’t ‘Disallow’ the folder path in the robots.txt.

Can you both provide an example of the CSS or JS that you are seeing blocked? Looking at WPTI, its robots.txt is blocking wp-includes. While this is the default setting, it blocks core JS from Google, so you need to allow the explicit file. For example:

    Disallow: /wp-content/plugins/
    Disallow: /wp-content/mu-plugins/
    Disallow: /wp-admin/
    Disallow: /wp-includes/
    Disallow: /out/
    Disallow: /embed.js?*
    Allow: /wp-includes/js/jquery/jquery.js
    Allow: /wp-content/plugins/w3-total-cache/
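The `Disallow: /embed.js?*` line in that list uses a wildcard. Google and RFC 9309 define `*` as “any run of characters” and a trailing `$` as “end of the URL path”; anything else in a rule is matched literally from the start of the path. A minimal sketch of that translation into a regular expression (the helper names are made up for this example):

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex: '*' matches any run of
    characters and a trailing '$' anchors the end of the URL path."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matches(pattern: str, path: str) -> bool:
    """re.match anchors only at the start, so unanchored patterns act as prefixes."""
    return pattern_to_regex(pattern).match(path) is not None


if __name__ == "__main__":
    print(matches("/embed.js?*", "/embed.js?v=2"))               # True
    print(matches("/embed.js?*", "/embed.js"))                   # False: no '?'
    print(matches("/*.css$", "/wp-content/themes/t/style.css"))  # True
    print(matches("/*.css$", "/style.css?ver=1"))                # False: '$' anchors
```

So `/embed.js?*` behaves exactly like the prefix `/embed.js?`: it blocks `embed.js` requests that carry a query string, but not a bare `/embed.js`.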

WOW… I’m testing this right now! I’ll publish my experiments very soon.

Thanks for advice!

Thanks a lot, Yoast.

This is a real coincidence, because in the past 24 hours I searched for everything about this CSS and JavaScript blocking.

Now it’s confirmed that I need to edit my robots.txt.

But a question: what about plugins’ CSS and JavaScript?

Do I need to add the CSS and JavaScript of plugins to the allowed section?

Plugins like YARPP related posts in widgets use their own CSS, so what about them?

Great article, Need to check my website now. Thanks again

How can we let only Google’s bot access all the assets of WordPress and block other bots?
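robots.txt supports separate groups per crawler: a crawler follows the group whose `User-agent` line names it and falls back to the `User-agent: *` group otherwise. Here is a sketch of a hypothetical file that lets Googlebot fetch everything while keeping other crawlers out of the WordPress asset folders, checked with Python’s `urllib.robotparser` (the `example.com` URLs and `SomeOtherBot` name are placeholders). Keep in mind that robots.txt is advisory: it only keeps out crawlers that choose to honour it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: Googlebot may fetch everything, while all other
# crawlers are kept out of the WordPress asset folders.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /

User-agent: *
Disallow: /wp-includes/
Disallow: /wp-content/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

ASSET = "https://www.example.com/wp-includes/js/jquery/jquery.js"
print(parser.can_fetch("Googlebot", ASSET))     # Googlebot group: allowed
print(parser.can_fetch("SomeOtherBot", ASSET))  # falls back to '*': blocked
```

The blank line between the two groups keeps them separate; the `*` group is only consulted for crawlers that no other group names.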

Thanks, Joost, for this post! My site also lost 50% of its organic traffic after Panda 4.0 :( . Let me try this!

Until now, the CSS and JS on most of my sites were blocked by robots.txt. Now they aren’t, but I doubt whether any recovery is directly related to Panda updates.

I’m having the same issue. Can anyone please explain how to make robots.txt not block my JS and CSS?

I am wary of this one; sometimes these things fluctuate and you can’t tell for sure for a couple of weeks. Definitely weird, though!

Interesting one. It seems a touch heavy-handed if all other signals for the site are positive. Still, having tweaked lots of sites that were affected by aggressive advert placement, this is something else to add to the toolbox. Thanks for sharing. :)

I checked your robots.txt and you are still blocking the whole plugins folder. Can you please tell me the reason behind it? I’m confused about what to do in my case.

Thanks in advance

Matt Cutts at SMX (June 19th, 2014) gives some additional info and explanation on the Fetch as Google CSS blocking issue. Check the video interview at 16 min 35 sec: https://www.youtube.com/watch?v=qglAm8QiX5k#t=1049

Can you share more about the Panda 4.0 update?

Google is perplexing webmasters by introducing new methods into its crawling. In any case, we have to pay attention to robots.txt to avoid ranking drift.

Panda 4.0 made my blog disappear from search engines. Do you know how to set up the robots.txt for my blog?

Is the Panda 4 update all about making websites that fake ads with CSS and JS lose rankings?

They keep changing techniques and sometimes confuse me. For example, I tested “fetch and render” on my website, but it says access is denied for the Google Fonts URL http://fonts.googleapis.com. What can I do about this?

I can imagine that if people are faking things and using JS and CSS for their ads, Google would punish them. But what about the people who use them the right way? I want to see more analytics. Just my point of view.

We never block CSS and JS – and still got cut down by a third in the update. I did the fetch and render, and it turned out the only things blocked by robots.txt are on other people’s servers: Google Fonts and the incredibly popular ad network we use, whose servers block the JS. So, if that mattered, then *everyone* who uses popular ad networks would drop. So I’m a little (a lot) confused about what the answers really are now. We’re not a site in a spammy category, but it seems like for us it will turn out to be something else, unless Google really is penalizing blocked external JS.

I doubt you are penalized for blocked external JS. We currently have AdSense running, and the ads are not displayed in the render. Looking at Google’s robots.txt, they block their ads. Most top ad networks do this as well. So I believe that if it is a JS ad, Google should be able to identify it and you will be okay.

I’ve totally been blocking my theme and plugins folder since forever. Thank you so much for sharing this!

Could it perhaps be that the WordPress SEO plugin is the culprit? I just ran my site through Fetch & Render and also get errors saying that certain things are not being picked up by the robot. It says that’s due to the robots.txt file, but as far as I know I only have the virtual robots.txt file generated by the plugin.

Great article. I need to check my Google Webmaster Tools page.

Since this update, is your post “How to cloak your affiliate links” no longer a viable solution for ad network JavaScript ads?

Very interesting hypothesis. However, it seems a little premature to draw conclusions.

If you are not affected by Panda, it is better not to change your settings; wait and see ;)

I think this is not a case of Panda 4; this is a case of normal Google crawling. But do you have any idea how to regain our position when some fake websites come along and grab search engine rankings? Second point: what is the effect on sitemaps when a user frequently changes his/her sitemap?

Ditto to some of the comments above – even with just blocking /wp-content/plugins/, I am still getting a partial render, as a lot of items within it are blocked. Google Ads are also being blocked. Is there new suggested robots.txt code?

When I use the fetch function in Webmaster Tools, there are a handful of links that the Google spider wants access to, but these are offsite.

For example, a Google API script for fonts:

http://fonts.googleapis.com/css?family=Lato%3A300%2C400%7CMerriweather%3A400%2C300&ver=2.0.0

And a google share button:

https://apis.google.com/_/+1/sharebutton?plusShare=true&usegapi=1&action=share&annotation=bubble&origin=http%3A%2F%2Fwww.shadowofiris.com&url=http%3A%2F%2Fwww.example.com%2F&gsrc=3p&ic=1&jsh=m%3B%2F_%2Fscs%2Fapps-static%2F_%2Fjs%2Fk%3Doz.gapi.en_US.cYW_kRj2Awc.O%2Fm%3D__features__%2Fam%3DAQ%2Frt%3Dj%2Fd%3D1%2Fz%3Dzcms%2Frs%3DAItRSTOLzAf574e343dsj-b3zXcmw-hYXA

There’s another one for a Twitter image at Twitter, one for a Reddit image at Reddit, and a couple more for Google content…

Can I safely ignore stuff like this? I have no way to grant access in their robots.txt files.

Thank you for any answers.

Same problem for me too :(

I have the exact same question. Anyone?

Very nice post, this should help a lot of people trying to figure out why their rankings drop and how to get them back.

Thanks!

Great post, Joost. I’m suffering from Google’s Panda as well at the moment. A colleague of mine who is responsible for SEO showed me the new ‘Fetch and render’ feature in Google’s Webmaster Tools, but we didn’t make the connection with Google Panda. I changed my robots.txt based on your suggestions (https://yoast.com/example-robots-txt-wordpress/ – allowing everything except /wp-content/plugins/), which resulted in perfectly rendered pages.

One thing is still not clear to me, though. You talked about how advertising and its placement could have consequences for page ranking. Despite changing my robots.txt according to your suggestions, adsbygoogle.js and a Google font are still being denied by robots.txt. Any thoughts on that would be welcome.

Two months ago I removed all Disallow rules from my WordPress site. Suddenly Googlebot started crawling lots of pages that returned 404 errors. These pages didn’t exist, and I think this is caused by Google’s JavaScript parser.

To make things more complicated, the errors come only from the smartphone bot; the desktop bot doesn’t see them.

Fortunately, this update is not bothering me either. Thanks for the article, Mr. Yoast.

Very helpful information!

I noticed a serious drop in traffic about a month ago and couldn’t account for it, which was especially frustrating after implementing several SEO updates on my site. I just ran my site through “fetch and render” and saw that some of my scripts and CSS were denied by robots.txt. Additionally, I have an ad above the fold that I am afraid might be affecting my rankings.

Is there anyone out there who has seen improvements after removing an above the fold ad?

Thanks for sharing this nice information. I think many blogs and websites have been caught by this update…

I recently noticed Google has updated its recommendations for the site migration process (when moving to a new URL) to include not just redirects of page URLs but also embedded resources like JS and CSS. https://support.google.com/webmasters/answer/6033086?hl=en&ref_topic=6033084 (maybe I was a bit slow, but I wasn’t aware that you had to redirect those files)

It makes sense; however, it is clearly an early conclusion. As we can also see in the graph, the first dive did not coincide with the Panda 4.0 release. As I remember, the MozCast temperature was very high in those days, possibly due to the release of the Payday Loan 2.0 update targeting spammy queries.

However, your point is good and I’m planning to check those aspects on some of my sites.

Thanks, Joost. I found that I had blocked all CSS and JS on all of my sites. I noticed that drop as well recently and hope to get back to previous levels. This is a really easy fix.

For the life of me, I still don’t know how to correct this. Any pointers?

Thanks for the note, Yoast. I am interested in hearing your theories any day of the week, even if they involve Bigfoot riding a UFO.

Quick question, what does this mean for the robots.txt of my WP installation ? Should I get rid of the “Disallow: /wp-content/themes/” ?

I know you wrote an article about this back in 2012 but I also noticed your robots.txt looks significantly different today. Any chance of an update?

Cheers!

Great post and it definitely makes sense.

Hi Joost,

On one site of mine I lost about 100% of my clicks from Google. Yep, that’s a lot. I know Google doesn’t like my kind of website (business listings for a local village/municipality), but losing 100% after May 21st was beyond my scariest nightmares.

Most of my ads are in the left sidebar, which is loaded in my theme before the main content (above the fold?). I’ll get busy with this article in mind and let you know the results -if any- in a week or two.

Oh man, Google seems harsh to penalize a site for this.

There has to be a way for Google to show the reason for a penalty.

I just did a Fetch & Render test as described in the article and it returned a partial result. The reason as described in the result was that the stylesheet for Google Web Fonts could not be loaded by the renderer because it was blocked by robots.txt. Google Fonts robots.txt indeed blocks all user-agents. So Google doesn’t follow their own rule. I wonder if this actually hurts users or they have an internal exception for their own services.

Yes, I have seen the same issue on 3 of my sites where google fonts are shown as being blocked by robots.txt.

I guess this is a case of Google not eating its own dog food. I also hope this does not count against us, and it is not great having only a partial match there either, especially if clients have a look. Maybe this is the first move to phase out Google Fonts like they did with Reader ;-)

I witnessed something similar last month. A client’s site suddenly started to lose positions despite very little competition. Like you, I went hunting for problems, used Fetch as Googlebot and saw the styling wasn’t rendering… BUT, unlike what you discovered, this was caused by W3 Total Cache merging/minifying the CSS and JS files, which stopped the media queries from working as they should on the mobile responsive side.

So viewed on a normal desktop the site was fine, but viewed on a tablet or phone it was missing its styling and JS.

Rather than fixing the underlying issue, as a test I simply disabled the minify part of W3 Total Cache. Once done, I fetched as Googlebot again and told Google to recrawl all linked pages, and a day later positions were back to normal.

Adam, that sounds like my issue. I am also using W3 on my client site… I’ll have to look at that specific option and see if it helps. It only happens occasionally and on desktop. Once I refresh, I am good to go — just a pain to do it every other time.

Yes, I agree it’s intermittent and generally clears after a “clear all cache” in W3. I noticed a small coincidence: it happened every time there was a server update, Apache restart or something related, which I assume renders the old saved cache “out of date” in a sense. I’ve since changed the minify settings in W3 so it doesn’t currently process those functions, at least until I get to the point where I rebuild the theme.

Thanks for sharing! I’ve encountered this on one of my site… how do you go about lifting the “block”?

Isn’t it still early to say for sure that they’ve recovered? There was a previous positive fluctuation (5/22), but it went right back down. Isn’t it possible this is another fluctuation, and their search visibility will go down next week? Just a thought.

Good points. Could it be that Google implemented something to detect whether people were working around the “Top Heavy” algo update (targeting too many ads) by blocking CSS and JavaScript? If so, they added it at the same time as Panda 4 and Payday Loans to make it harder for SEOs to realize what was going on.

Anyway, this doesn’t seem related to Panda 4 at all, especially because of the quick return. Good findings you made here, but for the wrong Google algo update.

It’s that old situation of correlation vs. causation. But no one can take away your credit for this great finding.

In my opinion it’s not Panda. The dates don’t match (the first drop came before Panda’s release), and to recover from Panda you need an algo refresh besides fixing the site.

It’s interesting, anyway. Google surely takes layout into account (remember the Top Heavy algo), and this strange traffic trend can’t be tied to anything manual.

Looking forward to more details.

My thoughts exactly.

I wouldn’t jump to (too) early conclusions. That being said, it would be great to stay on top of this, monitor future changes and (maybe) try blocking CSS & JS again to see if it’s really the trigger.

Cheers

Pascal

Yes, I agree. I have 3 blogs and am helping my friends maintain theirs, a total of 7 blogs, all on different servers, themes and configurations.

All three of my blogs gained traffic while one of my friends lost traffic. My blogs have the same problem Yoast highlighted, blocked CSS and JS, while my friend’s blog doesn’t have this issue when I performed ‘Fetch and Render’ in Webmaster Tools.