Google BERT: A better understanding of complex queries

Google turned some heads when it announced an update with an inconspicuous name, BERT, and called it the “biggest change of the last five years” and “one that will impact one in ten searches”. BERT is a natural language processing (NLP) model that helps Google understand language better so it can serve more relevant results. There are a million and one articles online about this news, but we wanted to update you on it nonetheless. In this article, we’ll take a quick look at what BERT is and point you to several resources that will give you a broader understanding of what BERT does.

To start, the most important thing to keep in mind is that Google’s advice does not change when it rolls out updates like this to its algorithm: keep producing quality content that fits your users’ goals and make your site as good as possible. So, we’re not going to present a silver bullet for optimizing for the BERT algorithm, because there is none.

What is Google BERT?

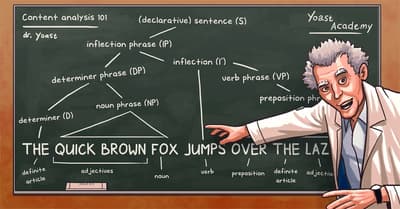

BERT is a neural network-based technique for natural language processing (NLP) that has been pre-trained on a large body of plain text, including English Wikipedia and the BooksCorpus. The acronym stands for Bidirectional Encoder Representations from Transformers. That’s quite the mouthful. It’s a machine-learning model that should lead to a better understanding of queries and content.

The most important thing you need to remember is that BERT looks at the context and relations of all the words in a sentence at once, rather than processing them one by one in order. So BERT can figure out the full context of a word by looking at the words that come both before and after it. This bidirectional approach is what sets BERT apart from models that read text in one direction only.
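
To make the difference concrete, here is a toy Python sketch. It is not BERT and it involves no neural network; it only shows which words a strictly left-to-right reader has seen by the time it reaches a given word, compared with the words available when both sides are considered. The function names and the example sentence are our own and are purely illustrative.

```python
def left_context(tokens, index):
    """Words a strictly left-to-right reader has seen before tokens[index]."""
    return tokens[:index]


def full_context(tokens, index):
    """Words on both sides of tokens[index], as a bidirectional model can use."""
    return tokens[:index] + tokens[index + 1:]


# Illustrative sentence: "bank" is ambiguous until you read what follows it.
sentence = "he sat on the bank of the river".split()
position = sentence.index("bank")

print(left_context(sentence, position))
print(full_context(sentence, position))
```

Only the second list contains "river", the word that tells you which kind of bank is meant.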

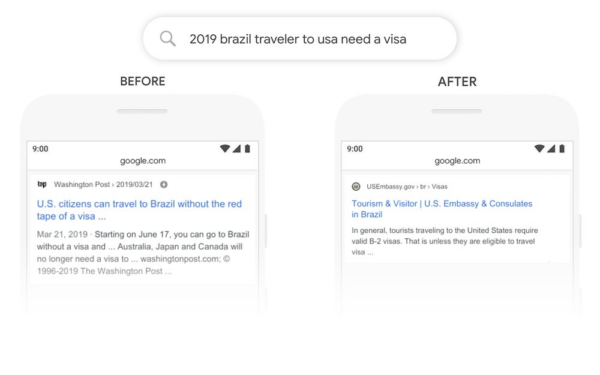

By applying this, Google can better understand the full gist of a query. Google published several example queries in the launch blog post. We won’t repeat them all, but we want to highlight one to give you an idea of how it works in search. To a human, the query [2019 brazil traveler to usa need a visa] is obviously asking whether a traveler from Brazil needs a visa for the USA in 2019. Computers have a hard time with that. Previously, Google would ignore the word ‘to’ in the query, which turned the meaning around: according to Google, the results were about U.S. citizens traveling to Brazil. BERT takes every word in the query into account and thus figures out the intended meaning.
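
A toy Python sketch shows why ignoring a small word like ‘to’ is a problem. This is not how Google processes queries, and it is not BERT; the stop-word list and function names are hypothetical and exist only for this illustration. It reduces two queries with opposite meanings to an unordered set of keywords and then compares them.

```python
# Hypothetical stop-word list, chosen for this illustration only.
STOP_WORDS = {"to", "a", "the", "for"}


def keyword_set(query):
    """Reduce a query to an unordered set of keywords, dropping stop words."""
    return {word for word in query.lower().split() if word not in STOP_WORDS}


def ordered_tokens(query):
    """Keep every word of the query in its original order."""
    return query.lower().split()


query_a = "2019 brazil traveler to usa need a visa"
query_b = "2019 usa traveler to brazil need a visa"  # opposite direction of travel

# As keyword sets the two queries are indistinguishable.
print(keyword_set(query_a) == keyword_set(query_b))

# With every word kept in order, they are clearly different.
print(ordered_tokens(query_a) == ordered_tokens(query_b))
```

Once ‘to’ and the word order are gone, nothing is left to say who is traveling where. A model that reads the whole query in context keeps that information.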

As you can see from the example, BERT works best on more complex queries. It is not something that kicks in when you search for head terms, but rather for queries in the long tail. Still, Google says it will impact one in every ten searches. And even then, Google says that BERT will sometimes get it wrong. It’s not the be-all and end-all solution to language understanding.

Where does Google apply BERT?

For ranking content, BERT is currently rolled out in the USA for English-language queries. Google will use what it learns from BERT to improve search in other languages as well. Today, BERT is already used for featured snippets in all markets where these rich results appear. According to Google, this leads to much better results in those markets.

Useful resources

We’re not going into detail about what BERT does, its impact on NLP, or how it’s now being incorporated into search, because this article takes a different approach. If you want to understand how it works, you should read up on the research. Luckily, there are plenty of readable articles on this subject.

- The original BERT paper (pdf) has everything you need to figure out exactly how BERT works. Unfortunately, it is very scholarly and most people need some ‘translation’. Luckily, DataScienceToday dissected the original BERT paper and turned it into readable lessons: Paper Dissected: “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding” Explained.

- Rani Horev’s article BERT Explained: State of the art language model for NLP also gives a great analysis of the original Google research paper.

- Dawn Anderson is one of the most interesting SEOs to follow on this subject. Check out her epic deep-dive into BERT and her slide deck called Google BERT and Family and the Natural Language Understanding Leaderboard Race.

- Need a primer on NLP, the technology computers use to understand human language? Here’s a six-minute read: an introduction to Natural Language Processing.

- And, since Google open-sourced BERT, you can find the whole thing on GitHub. Why not run your own models and experiments?

- Plus, a great article by AJ Kohn (from last year, but very relevant) on how to analyze algorithmic changes in the age of embeddings.

- Last but not least, an article from Wired author John Pavlus called Computers are learning to read, but they’re still not so smart.

This should give you a solid understanding of what is going on in the rapidly developing world of language understanding.

Google’s latest update: BERT

The most important takeaway from this BERT update is that Google is yet again getting closer to understanding language on a human level. For rankings, it means that Google will present results that are a better fit for the query, and that can only be a good thing.

There’s no optimizing for BERT other than the work you are already doing: produce relevant content of excellent quality. Need help writing awesome content? We have in-depth SEO copywriting training that shows you the ropes.

Great article. I have read that BERT does not affect a website’s traffic, but traffic for a few of my clients has gone down since the BERT algorithm launched.

Finally, Bert is here! I was hoping that Google was going in that direction and they did. Until Oct 29 the pageviews were around 12.000 per day. On Oct 31, they went to 35.000 and yesterday they were 76.000.

I’m smiling all day.

“So, we’re not going to present a silver bullet for optimizing for the BERT algorithm because there is none”. That’s not true, mister, I’ve been optimizing for Bert for the past 2 years without knowing, but now I know what Bert likes!

That’s interesting! Thank you Okoth!

Could you please share some of the optimization tips you’ve been using over the past 2 years? That would be much appreciated!

Is BERT helpful for older websites or content?

Wow!!! the bug will catch!

Hopefully it will help us out and eliminate black hat.

Great news.

You are so right to remind each of us to continue delivering quality content. That’s the bottom line, and here’s why.

Google will continue with their agenda, and that’s fine. However, attempting to stay in lock step with their agenda causes chaos, exhaustion, and frustration.

I follow Yoast regarding SEO structure and have been quite satisfied with the results. I also appreciate these updates about SEO strategies and the ongoings with search.

The bottom line is to continue marketing online and offline in ways that reach and convince your audience. For me, Google can unveil whatever they wish. I’ll keep practicing solid SEO. Much thanks to Yoast for tools that help me with my goals.