Why your tests aren’t scientific

I read a lot of articles about A/B tests and I keep being surprised by the differences in testing that I see. I think it’s safe to say: most conversion rate optimization testing is not scientific. It will simply take up too much space to explain what I mean exactly by being scientific, but I’ll publish a post on that next week, together with Marieke.

I’ll be very blunt throughout this post, but don’t get me wrong. A/B testing is an amazing way to control for a lot of things normal scientific experiments wouldn’t be able to control for. It’s just that most people make interpretations and draw conclusions from the results of these A/B tests, that make no sense whatsoever.

Not enough data

The first one is rather simple, but still a more common mistake than I could ever have imagined. When running an A/B test, or any kind of test for that matter, you need enough data to actually be able to conclude anything. What people seem to be forgetting is that A/B tests are based on samples. When I use Google, samples will be defined as follows:

a small part or quantity intended to show what the whole is like

For A/B testing on websites, this means you take a small part of your site’s visitors, and start to generalize from that. So obviously, your sample needs to be big enough to actually draw meaningful conclusions from it. Because it’s impossible to distinguish any differences if your sample isn’t big enough.

Having too small a sample would be a problem with your Power. The power is a scientific term, which means the probability that your hypothesis is actually true. It depends on a number of things, but increasing your sample size is the easiest way to make your power higher.

Run tests full weeks

However, your sample size and power can be through the roof, it all doesn’t matter if your sample isn’t representative. What this means is that your sample needs to logically resemble all your visitors. By doing this, you’ll be able to generalize your findings to your entire population of visitors.

And this is another issue I’ve encountered several times: a lot of people never leave their tests running for full weeks (of 7 days). I’ve already said in one of my earlier posts, that people’s online behavior differs every day. So if you don’t run your tests full weeks, you will have tested some days more often than others. And this will make it harder to generalize from your sample to your entire population. It’s just another variable you’d have to correct for, while preventing it is so easy.

Comparisons

The duration of your tests becomes even more important when you’re comparing two variations against each other. If you’re not using a multivariate test, but want to test using multiple consecutive A/B tests, you need to test these variations for the same amount of time. I don’t care how much traffic you’ve gotten on each variation; your comparison is going to be distorted if you don’t.

I came across a relatively old post by ContentVerve last week (which is sadly no longer online), because someone mentioned it in Michiel’s last post. Now, first of all, they’re not running their tests full weeks. There’s just no excuse for that, especially if you’re going to compare tests. On top of that, they are actually comparing tests, but they’re not running their tests evenly long. Their tests ran for 9, 12, 12 and 15 days. I’m not saying evening this would change the result. All I’m saying is that it’s not scientific. At all.

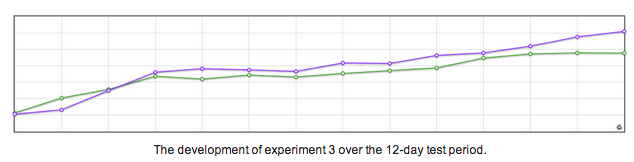

Now I’m not against ContentVerve, and even this post makes a few interesting points. But I don’t trust their data or tests. There’s one graph in there that specifically worked me up:

Now this is the picture they give the readers, right after they said this was the winning variation with a 19.47% increase in signups. To be honest, all I’m seeing is two very similar variations, of which one has had a peak for 2 days. After that peak, they stopped the test. By just looking at this graph, you have to ask yourself: is this effect we’ve found really the effect of our variation?

Data pollution

That last question is always a hard question to answer. The trouble of running tests on a website, especially big sites, is that there are a lot of things “polluting” your data. There are things going on on your website; you’re changing and tweaking things, you’re blogging, you’re being active on social media. These are all things that can and will influence your data. You’re getting more visitors, maybe even more visitors willing to subscribe or buy something.

We’ll just have to live with this, obviously, but it’s still very important to know and understand it. To get ‘clean’ results you’d have to run your test for a good few weeks at least, and don’t do anything that could directly or indirectly influence your data. For anyone running a business, this is next to impossible.

So don’t fool yourself. Don’t ever think the results of your tests are actual facts. And this is even more true if your results just happened to spike on 2 consecutive days.

Interpretations

One of the things that even angered me somewhat is the following part of the ContentVerve article:

My hypothesis is that – although the messaging revolves around assuring prospects that they won’t be spammed – the word spam itself give rise to anxiety in the mind of the prospects. Therefore, the word should be avoided in close proximity to the form.

This is simply impossible. A hypothesis is defined, once again by Google, as “a supposition or proposed explanation made on the basis of limited evidence as a starting point for further investigation.” The hypothesis by ContentVerve is in no way made on the basis of any evidence. Let alone the fact he won’t ever pursue further investigation into the matter. With all due respect, this isn’t a hypothesis: it’s a brainfart. And to say you should avoid doing anything based on a brainfart is, well, silly.

This is a very common mistake among conversion rate optimizers. I joined this webinar by Chris Goward, in which he said (14 minutes in), and I quote:

“It turns out that in the wrong context, those step indicators can actually create anxiety, you know, when it’s a minimal investment transaction, people may not understand why they need to go through three steps just to sign in.”

And then I left. This is even worse, because he’s not even calling it a hypothesis. He’s calling it fact. People are just too keen on getting a behavioural explanation and label it. I’m a behavioural scientist, and let me tell you; in studies conducted purely online, this is just impossible.

So keep to your game and don’t start talking about things you know next to nothing about. I’ve actually learned for this kind of stuff and even I’m not kidding myself I understand these processes. You can’t generalize the findings of your test beyond anything of what your test is measuring. You just can’t know, unless you have a neuroscience lab in your backyard.

Significance is not significant

Here’s what I fear the people at ContentVerve have done as well: they left their test running until their tool said the difference was ‘significant’. Simply put: if the conversions of their test variation would have dropped on day 13, their result would no longer be significant. This shows how dangerous it can be to test just until something is significant.

These conversion tools are aptly called ‘tools’. You can compare them to a hammer; you’ll use the hammer to get some nails in a piece of wood, but you won’t actually have the hammer do all the work for you, right? You still want the control, to be sure the nails will be hit as deeply as you want, and on the spot that you want. It’s the same with conversion tools; they’re tools you can use to reach a desired outcome, but you shouldn’t let yourself be led by them.

I can hear you think right now: “Then why is it actually working for me? I did make more money/get more subscriptions after the test!” Sure, it can work. You might even make more money from it. But the fact of the matter is, in the long run, your tests will be far more valuable if you do them scientifically. You’ll be able to predict more and with more precision. And your generalizations will actually make sense.

Conclusion

It all boils down to these simple and actionable points:

- Have a decent Power, among others by running your tests for at least a week (preferably much more);

- Make your sample representative, among others by running your tests full weeks;

- Only compare tests that have the same duration;

- Don’t think your test gives you any grounds to ‘explain’ the results with psychological processes;

- Check your significance calculations.

So please, make your testing a science. Conversion rate optimization isn’t just some random testing, it’s a science. A science that can lead to (increased) viability for your company. Or do you disagree?

Again it all boils down to numbers. If you have a website that doesn’t get the traffic numbers, your tests could run for years. If you want to get some solid numbers and feedback on what is working for your website, you have to get traffic and let the data speak for itself.

SEO tests are hard because you really need long periods of time to test stuff, and then Google goes and changes everything again. At last count I had over 20 websites that I own that I experiment with – never with a client website, always with my own domains on websites that don’t matter much. It’s amazing what you can discover. Learn by doing! Cheers, Thijs

Wow what an interesting read Thijs, good enough to lure all people to in with the need to respond :-) but enjoy your honeymoon man… the comments can wait for a week, your once in a lifetime trip can not!

Don’t reply… enjoy the trip

Dennis

Will this plugin be whitelabel or it will have all the marketing around it like the free version?

Thanks

Davys

Excellent advice… testing requires patience. I’ve noticed its easy to think something is failing when you don’t have enough data. There’s a ton of variables with response and how its affected. Great to see an article talking about this.

One request – it would be great to see you post a case study on how formal your own AB testing is. Could learn from that…

Thijs,

In some of your critique and comments you seem to forget that most splittest cases are not designed to validate the principles behind the winning variant. They are simply designed to find the winner and improve the business.

I agree, then, that it is unscientific to conclude on psychological principles behind the winners in this way. You can extract principles, though, as Michael and Chris have done and validate these in further tests. While this will still not prove anything with scientific validity, it does indicate which principles have the greatest success.

I believe people like Michael and Chris who have 100s of tests under their belts have developed a kind of unconscious intuition toward these underlying principles. By sharing their – yes, unscientific – principles, they have helped many companies improve their websites and earn more money.

The philosophical argument of scientific vs unscientific methods is lost on me. These guys improve businesses and makes the web a better place for the rest of us. I don’t care if they use unproven principles or mindsets. They do great work and give their clients return on their investment. And they don’t claim to do so in the name of science. Instead they do it in the name of their clients.

I’m trying to apply A/B Tests in my currents projects. Thank again for providing us “helpscout.net”. I still had some problems in the definition of good hypotheses

The best difficulty is to be patient. With A:B test, it’s not really easy when you want things work immediatly.

I’m agree with you; But How can we exactly chose the good metric for sample?

I would say it is a little heuristic this data, which could mean that it is not really a science

HI Joast,

I could see a newsletter signup at the right corner bottom. I love that look and feel. I want to add the similar one for my website. What is the plug-in name. I would be very great full if you can provide details about that.

Thanks,

Krishna

Your posts are very useful for the modern SEO trends. Thanks!!

What’s up Thijs – Michael from ContentVerve.com here ;-)

I’m glad I could inspire you to write this post! It gives food for thought, and I appreciate that. Because, just like every one else in the CRO game, I’ve got tons to learn, and I’m learning new stuff every day – I’ve certainly learned a lot since I wrote the post you’re referring to here (not least about testing methodology).

It really seems like you know what you are talking about, and that you have a lot of experience with scientific A/B testing. I’d love to get take-aways from some of your case studies – your post here has given me high expectations ;-) Could you share some of your case studies, maybe post some links in the comments or in the article? Preferably studies where you disclose full data and methodology. That would be awesome – thanks!

In the comments you wrote, “I felt treated in a less than gentlemanly fashion when they went and spouted all these behavioural claims to their tests. That is my home turf. It’s my ground, and I dare say I know a lot about it. And when someone comes in and bluntly claims to understand the human psyche, well, yes, I get pissed off.”

I honestly don’t think that I made any blunt claims in the post – and certainly not any blunt claims that I understand the human psyche. In fact, all the way through the post, I used words and phrases like “I believe”, “it would seem”, “can”, “could”, “points to” and “suggests”. These words are commonly used to convey the fact that these are observations and interpretations – not undeniable facts set in stone.

Moreover, the only place where I use the word “should” is in the hypothesis. And however stupid you find my hypothesis, I think we can both agree that a hypothesis and a conclusion are two different things ;-)

Moreover, it seems that you do agree with some of the observations Chris and I have made. In the comments you wrote “And let me admit, there probably is some kind of anxiety effect in all this.” Being a highly educated and experienced behavioral scientist, your statement should lend some credence to our observations.

In my perspective, I performed a series of tests, disclosed the data, made observations and published it all for others to read. People can do with the information what they want – to my knowledge most readers use it as inspiration for further experimentation on their own websites. I’m not trying to tell anyone exactly what to do, or that I hold the eternal truth.

Anyways – nice chatting with you, I’m looking forward to learning from your case studies and scientific methodology!

– Michael

Hi Thijs,

I understand that you’re young. You’re fresh out of school and proud of your degree. You should be. It’s a worthwhile accomplishment!

My own education was in Biochemistry, Marketing and Business. That was quite some time ago and I learned, as I’m sure you eventually will, that the real learning begins when school ends. At least, it should.

I also observe that you’re eager to make a name for yourself in this industry quickly. It was only three months ago that you blogged about how my book had inspired you to test. As you said, it “really changed my idea of conversion and made it understandable and clear. But most of all it convinced me of the need for testing. Use the ideas and advice from those blogs and books as hypotheses. And then test them.”

(Here’s the article I’m referring to, which I happened to stumble across today: https://yoast.com/conversion-rate-optimization-hypothesise-test/)

Perhaps you think a fast way to get attention is to throw stones at industry leaders. Well, congratulations; you got me to comment on your blog! Only because you may be misleading many people through this large platform your brother has generously shared with you.

Thijs, for all your talk about large data samples and statistical power, you’ve managed to take one lone comment of mine out of context and blow it into a straw man to be your punching bag. If you’ve listened to more than the few minutes of any of my webinars, you’ll know that I repeatedly stress how little one knows without testing, and how a winning result can only give inferences (at best) about the “Why” behind it. Only through a series of carefully planned tests can one begin to develop theories that could be predictive.

In the comment you called out, I did not say that I know for a fact that steps indicators create anxiety. I was trying to say that they *could* be creating anxiety. That *may* be the reason for lower conversion rate. And, it would be interesting to test that in a variety of different scenarios to see if that hypothesis holds true.

Your vitriol is misguided.

Thijs, I’d advise you to take a breather and consider carefully before claiming experts are “talking about things [they] know next to nothing about.”

You may find yourself on the receiving end of similar observations.

I truly wish you the best on your journey.

Chris

Thanks for your reply.

I’m just going to ignore the ‘age and wisdom’ comment, but I do want to say this. Don’t think that I’m Joost’s young and little brother and this post just happened to land on here. We think about what we say. In fact, we have years of experience in scientific research in our office, and I’ve used it all to come to this post. If they disagreed on anything, or thought anything not thought out well enough, it wouldn’t be here. That actually happened to parts of my text.

About your book: that still stands true! It’s an amazing book and it did inspire me. In fact, I use it nearly on a daily basis at the moment. So don’t think I don’t know value when I see it.

So imagine my surprise when I heard the comment I quoted coming out of your mouth. Because you do always and everywhere tell people to test everything. But you didn’t here, and that completely threw me off. Especially on something so important and basal.

And on top of that, which is maybe even my main argument: this is not what you’re testing! I just do not understand why this whole behavioural causation is brought in by a lot of people, when that is just not what they’re testing. That is not scientific.

And yes, I know it’s an isolated mention by you, but I’ve seen it happen a lot already. And isolated or not, I think this is something to be really careful about. It very much seems like you weren’t careful in that webinar.

And the fact that you’re only responding to that isolated comment, kind of has you doing the same. I see you’re saying I’m misguiding people. I’m happy to learn: where am I misguiding them?

I’d love to be on the receiving end of someone saying I know nothing. It may sting for a while, but if they make a fair claim, once again, I’m happy to learn. And I mean that.

You say you “just do not understand why this whole behavioural causation is brought in by a lot of people.” That’s a good question to ask, because it’s important.

I knew that I wasn’t directly testing the anxiety of the audience. That’s why I use qualifiers like “may” and “could.” But, that doesn’t make them any less useful.

It’s an inference. When something in science can’t be directly measured (or where it isn’t practical to do so) an inference can be made as to the cause. Then, through further testing, that inference can be supported or contradicted. If that inference holds true over many tests and becomes predictive, then it’s useful, regardless of whether it’s been directly measured.

Here’s an easy-to-understand overview from a middle school science teacher: http://www.slideshare.net/mrmularella/observations-vs-inferences

And, here’s a little more detail on Wikipedia: http://en.wikipedia.org/wiki/Inference

(Note, in particular, where it says “Inference, in this sense, does not draw conclusions but opens new paths for inquiry”)

Now, you’ve raised another strange idea when you say, “And the fact that you’re only responding to that isolated comment, kind of has you doing the same.” I really don’t follow your logic here. I’m just responding to your accusation.

I’ve also run out of time to continue this dialogue. You’ll have to learn some of this on your own :-)

Hi,

I posted a reply to your comment, Thijs – it seems to not have appeared. Oh well. Let me know if it surfaces.

Thanks for the great insight. Split testing is something that I definitely need to work on as far as better tracking goes. In the past I tried to change too many things at once without having large enough sample data.

This led to me making too many decisions based on data that was likely insignificant. I probably could have increased earnings quite a bit more, had I done things correctly.

Thanks a lot! Beware though: testing can give you completely wrong ideas if your website doesn’t have enough traffic.

Another mistake I see clients making is running test that don’t “scream.”

Even if you get loads of traffic and run for full duration IF you don’t test dramatically different variables you’re test results may be too close to draw any statistically significant conclusions.

I have a client who insists on testing minor phrase changes in the middle of the copy INSTEAD of a completely different version of the sales copy OR an entirely different layout.

Very insightful post. Thanks

Thanks a lot. I do mostly agree with you. I’m not sure whether changes have to scream, but I do think it’ll have to be noticeable changes. These can still be small, if it’s a prominent element on your page.

One last point – most people don’t leave a stub running at the end of a test – or revisit old control creatives.

At Belron, we did this regularly to check performance, particularly if other activity was changing the traffic mix after the end of test. We would leave a 5 or 10% stub going to the old design to keep an eye on control conversion, even after end of test was complete. We also revisited earlier control variants to ensure that our historical interpretation was still valid. Most people quote a 12.5% lift they got in January and walk around in July still telling the same story.

The truth is that unless you’re continually improving a site, that lift you got months ago may have vanished. Traffic changes, market changes, customer changes, product, website – all these may conspire to make your test result no more than confetti in the wind.

A valid point. And kind of linked to your seasonal variations, maybe even a sort of maturation.

But again, if I would’ve mentioned all this in one post, no one would’ve gotten to the end ;)

On my projects I often don’t have very much data to work with, and there is a cool method to help: bootstrapping.

Here is a link from Rutgers – Bootstrap: A Statistical Method: http://www.stat.rutgers.edu/home/mxie/rcpapers/bootstrap.pdf

Hi,

Disclaimer here – I’ve done a fair few tests

Firstly, don’t exhort people to do tests on weekly cycles unless that pattern exists. If you’re site, is a payday loan company, you have different cycles.

Seasonal variations also come into play as of course does the entire volatility and mix of traffic coming to your site. Turn off PPC budget and see what happens to your test results, There are a huge number of things that can so easily bias tests – you’re right that people need to test whole cycles and not ‘self stop’ or ‘self bias’ tests. I set out the cycle size before I begin testing and always test at least two of these.

THe other problem that your explanation doesn’t cover is about the frequency and distribution of the return visits to a site. If your cycle of purchasing spans 3 weeks and multiple repeat visits, testing 2 whole weeks won’t cut it. You’re deliberately removing part of the cumulative conversion rate for stuff you’re testing. And if the creative changed this repeat visit pattern for one of the samples, that will nicely bias your results. So you need to know the conversion path over several visits to know when you’re testing in a way that cuts valuable data.

On the subject of Michael’s tests – he is one of the more careful testers I know. Certainly in terms of the sample size and the length of test he aims for. Trust me when I say it’s people who do very poor quality tests that you should be singling out. He’s also one of the few people who publishes his data – many other sites, companies and organisations show partial data and it makes it hard for us to review it. However, this also invites scrutiny, which is good – if it’s directed in a way that helps the community. He’s not one of the bad guys in my book – and it feels wrong for you to be so harshly critical. There are better targets out there.

And on the anxiety bit, you are wrong. I’ve done plenty of tests with security flags – SSL indicators, security seals, payment logos, messaging and more. I also have spend thousands of hours both moderating user tests and viewing them as a client. I know that psychologically, what is being introduced here is anxiety from distraction.

You’ve taken someone with a goal in mind and then reminded them of a ‘bad thing’. In the case of security messages, if you use them at the wrong point in the process, you’re reminding people like this “Hey, you’re on the internet. Security is important. There are hackers and bad people out there. Worry about security. You know you’re about to give us your credit card?”.

This effect has been seen by usability testers and also by split testers. That’s why Chris mentions this and why Michael saw a similar effect when reminding people about spam at time of registration. As with all stuff, the personas, market, traffic are all different on your own site – so this has to be tested.

He’s raising a counter intuitive point with that test – which is that all us experts don’t know what works. It sometimes goes against all common sense (i.e. security seals would increase conversion) but real testing disproves our cherised notions.

One final poiint – most people don’t segment their tests. A and B are a composition of visitors that fluctuate in their response – new/returning, high value customers, visit source, referral. So many things are missed by not understanding the flow of the segment level performance in A/B test ‘average’ figures.

You raise good points about sample and ensuring traffic is free of bias, volatility and that it runs for non self selected periods. I’d also add browser compatibility issues to that list – as I’ve seen bias in a few tests where javascript clashes caused serious issues. Lastly, the session and cookie handling for recording data needs to be thoroughly thought out, especially if it doesn’t match the purchasing cycles you’re working to.

You have to be vigilant about all things: your own Psychology, the numbers involved, the traffic, the session handling, the QA, the checking, the watching and all the other sources of bias. It’s a very involved job.

I don’t think Chris and Michael deserve your rather offhand way of writing – it makes for a controversial read but hardly adds to the canon of knowledge here, when done in such a less than gentlemanly way.

In terms of your conclusion:

>>Have a decent Power, among others by running your tests >>for at least a week (preferably much more);

Not true – you’ll need to find your cycles and visitor patterns to conversion – paying note of cumulative conversion over time (repeat visits).

Make your sample representative, among others by running your tests full weeks;

Agreed about making it large enough and representative enough. Having a handle on your traffic mix and cycles is pretty important. It may not be full weeks that you test either – if you have different cycles. I’d always recommend at least two cycles – so you can compare them and see if they fluctuated in response. If they did, you may need to test longer or find out why.

>>Only compare tests that have the same duration;

Maybe – if you were comparing duplicate traffic patterns. I’m not sure what you mean here.

>>Don’t think your test gives you any grounds to ‘explain’ the >>results with psychological processes;

Agreed – but we can with UX work and other inspection tools provide evidence to triangulate these things. Silly people take a test as a conclusion to ‘do this thing’ on the rest of the planet. I only take it as a test rather than a stone tablet commandment. You need to work out your own customer fears, worries and barriers against your own test, site context and team. It’s not an instruction – just a test that someone else ran. Take from it what you like.

>>Check your significance calculations.

Agreed. Also – most people make the mistake of not understanding the error rate involved in tests. Most testing tools show a ‘line’ graph when it should be a ‘fan’ graph instead. The line is the centre of a region of probability and should be visualised as such. Many marketers mistake this for a precise line or performance point. When the error ranges overlap, either A or B could possibly be the winner.

Just thinking about this some more:

Did you actually set up a test area to test anxiety? Or do anything to measure it? Because if not, you’re doing exactly the same as both Michael and Chris… You’re hypothesizing about something you’re not even testing.

I see a pattern here, to be honest…

Hey – perhaps I see a pattern too. The irony is rather deep as you’re accusing Michael et al. of presenting an axiomatic truth. It isn’t – it’s a test result. The fact is that the conclusions of your post would lead people to doing poor testing – as it isn’t accurate. I shouldn’t have to cover that all again.

Michael and Chris aren’t trying to present these as truths and neither am I suggesting I have MRI scans of anxiety. You’re missing the point – there is plenty of evidence to suggest that reminding people of stuff that worries them, is a barrier to transacting or could cause them to think about negative aspects of the purchase. I’ve seen it in plenty of tests, explored it with people and watched their facial gestures when hitting something that worried them. No – it isn’t scientific proof but there’s nothing invalid with using usability techniques to get great insight. I don’t think anyone would suggest that there’s nothing useful to learn from testing customers with products and listening closely.

It’s kinda creepy that you mention that I know Michael and infer something from that (I actually have met him twice, at two conferences fyi) – I’m pretty open about who I meet and talk to at events. It doesn’t make any difference to me but it seems a bit stalkery for you to bring this up.

Let me hammer home the thing you’re missing here. Nobody is trying to present truths here. They are test results and I don’t think either of the authors you mention are making claims that you should just go and do this on a website without test or measurement. By publishing test results, people can get great ideas for test hypotheses they might want to try. It may not work at all for their customer mix so people are free to copy or do dumb things with them, as well as put them to good use in their own work.

And it’s nothing about being a nice guy – those are your words, not mine. I’m merely pointing out you’re taking a kinda controversial and factually flawed approach yourself. If you’d stop and consider it for a minute, you’d laugh at yourself for missing that.

You say that their articles were ‘ungentlemanly’ themselves and that’s what riled you up? Well your article riled me up – because it felt like a rant and not a considered piece of work. I did take a long time posting my original response and also thinking about this one. Certainly more time than you used to write the post in the first place.

It’s ok if you want to keep feeling angry about all these people being wrong. Some of us are trying to educate people about how to do tests better, not harangue people involved in that process of sharing and improving.

I feel enervated writing this, knowing you’re not listening and feeling sorry for that being the case. Enjoy the beer on your own at the bar.

And: “No – it isn’t scientific proof but there’s nothing invalid with using usability techniques to get great insight.”

You make me laugh…

Well, isn’t this nice… For someone who thinks I’m being ungentlemanly, you certainly have a nice way of “making your point”.

Let me first say, you have no idea how long it took me to write this post, so don’t even go there :) The fact that you wrote this response the same day as I posted my post, is proof my post took a lot longer to write.

And once again, I’ll ask you to bring that literature of anxiety forth to me. Because I’m not seeing anything of it anywhere near any of those posts. And that’s all I’m asking. Proof of what you’re saying.

Just like you’re telling me my post will lead people to do incorrect testing, because I’m wrong…

I’m sorry if my remark came off as creepy, because I certainly have no intention of stalking you, or anyone else. I must’ve misjudged your relationship with Michael. Maybe you react the way you do, because you’re doing the same exact things I’m criticizing.

There’s a lot of “you’re wrong” in this reply, but I don’t see you explaining yourself where I’m actually wrong, apart from your first reply, that is. And that one I have my own reply to. I’ll just have to wait until someone comes in and corrects me I guess…

Wow, you’re the winner on longest reply ever, lol!

Let me try to be as meticulous as you were in trying to bring down my arguments ;)

Weekly or cycles, it’s all fine. As I’ve said in the beginning of this post, expect another post in the coming week explaining in detail what we think is scientific. The most important lesson, however, is that people should actually be thinking about what they’re doing.

Yes, there are a lot of arguments missing from my post, and most of them I left out intentionally. I can go on forever, and I will, but just not in one post. This post is stretching it already for most people, and I want to be sure I’m understood by most readers. Your additions are duly noted and helpful though.

You seem to know Michael a lot better than me, and I actually know you met up with him on the Conversion Summit last week. Now, I’m not that biased. I don’t know him, although I’m sure he’s a nice guy. All I know is I read his post, and saw a ton of flaws in it, as I’m sure people, like yourself, will see in my post. Saying there are better targets is a non-argument to me. I’m not saying he’s a bad guy, which I’ve also mentioned in my post. All I’m still left wondering is this: if he’s such a careful tester, why don’t his posts show me that?

And now on to the anxiety bit. I love this, because you’re actually telling me I’m wrong. Let me tell you, you’re wrong :) So you’ve done a lot of user testing, moderating them, viewing clients, etc. So how, in this process of watching people, do you actually KNOW people are experiencing anxiety? You can see this? Because honestly, that’s a skill anyone would like to have. I know I’m being a bitch now, but that’s because this is EXACTLY the point that I’m trying to make. Just because you think you see something, doesn’t mean it’s there. In fact, you’re so convinced of this thing of anxiety, it’s probably hard for you to see otherwise. And that’s a statement I can actually back up with decent scientific literature. Did you even hand them a questionnaire about their feelings? Seriously, you’re really taking a backstab at the whole idea of behavioural and psychological research. Which is why I get so rowed up.

And even if you did have all the scientific and non-self-interpreted data from users, how do you know that the data from your studies, are transferable to completely different studies? It’s a completely different context, a completely different test. Chris’s test was about progress bars, yours was about security signs. They’re on different websites, with a completely different dataset. How are your findings relevant to Chris’s experiment? How are you going to control for that?

And let me admit, there probably is some kind of anxiety effect in all this. What bothers me, is that people just can’t go saying this and that is happening because of anxiety, without any scientific backup.

You’re saying neither Chris or Michael deserves what I put up here. But then again, it’s all up here, in this fashion, because I care about this stuff. And I felt treated in a less than gentlemanly fashion when they went and spouted all these behavioural claims to their tests. That is my home turf. It’s my ground, and I dare say I know a lot about it. And when someone comes in and bluntly claims to understand the human psyche, well, yes, I get pissed off. If they’re all doing this so scientifically, please give me the data to show for it.

And to be honest, I’d like for everyone to be as critical of me as I’m of everyone else. That’s the fastest and best way to learn in my opinion.

I’m not sure why my point on Power wouldn’t be true. It’s a simple fact that the more data you have, the higher your power becomes… Not really sure what’s wrong in that?

About the comparisons: you bring up a good point, which our post of next week will address as well. Comparing tests held on completely different times is nonsense. But then again; isn’t that what Michael did? And comparisons still need to be held over the same duration of tests.

We actually seem to agree on most of what I’m saying, it seems. Apparently the most “appalling” part of my post was that I actually used a live post by someone who’s liked by a lot of people (n=1). Well, I can’t say I’m sorry, because in my eyes that post deserved it.

Once again, don’t get me wrong. I’m sure Michael, and Chris for that matter, is a nice guy. But being a nice guy doesn’t necessarily make you right. Being right makes you right. I’ll still have a beer with any one of you, because this is a discussion I just can’t get enough of, however riled up I get ;)

I totally agree. Accurately doing your splittests is waaay different than just splittesting without proper knowledge about statistics.

And jumping to conclusion too early does not only results in wasting your time (and maybe conversions) but in the longterm also a incorrect picture of conversion optimization.

Is sometimes wonder if that is sometimes the reason why some markteers don’t agree with each…

Well, I guess marketeers don’t agree with each other because it’s in their nature to think only they can be right ;)

And yes this is a joke, so not scientifically backed up, before anyone gets me on this one ;)

While I agree with you 100% on the note that these tests are frequently not scientific, including ContentVerve’s – your note about his hypothesis implies that he came to it at the end – but he arrived at that after the first of the 4 tests (with a small amount of data) and then proceeded to do more testing.

I would say the he fulfilled the requirements to call it a hypothesis.

Also – in regards to the quote by Chris Goward – by the text he is not stating this as fact. He says “people MAY NOT understand” – which designates it as an opinion, though he was very likely implying that it was fact by his tone of voice (I didn’t listen to the audio, so cannot know for sure).

Well, Ross, ContentVerve did not test whether it actually caused anxiety, did they? Because that would mean actually going to the test subjects and measuring their stress levels, maybe even some neurocognitive tests to see if they were anxious… So he didn’t fulfill anything to call it a hypothesis, because this hypothesis was nowhere near to what he was actually testing.

And regarding Chris Goward, he said: “It turns out that in the wrong context, those step indicators can actually create anxiety”. So he is actually calling it fact. And this is beside the point really, because he’s making behavioural claims that were totally unrelated to his tests.

And that’s my whole point really: don’t talk about stuff you’re not testing. Let alone giving these things as a cause for your results.

Would you recommend agains using a “Automatically disable losing variations?” functionality as well ?

Thanks for your reply! Yes, I’d advice against that as well. The tools that offer these options are basing the ‘losing variations’ on significance. And that significance doesn’t mean a whole lot, as I said in my post.