Indexed, though blocked by robots.txt: what to do?

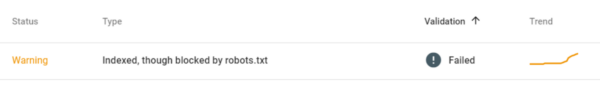

Do you see the following warning in Google Search Console: “Indexed, though blocked by robots.txt”? This means that Google indexed a URL even though your robots.txt file blocks it from being crawled. Google flags these URLs because it isn’t sure whether you want them indexed. What should you do in this situation, and how can Yoast SEO help you fix it? Let’s find out!

How to fix the warning “Indexed, though blocked by robots.txt”

Google found links to URLs that are blocked by your robots.txt file and indexed those URLs anyway. To fix this, go through those URLs and decide whether you want each of them indexed. Then, edit your robots.txt file accordingly, which you can do in Yoast SEO. Let’s go through the steps you’ll need to take.

- In Google Search Console, export the list of URLs.

Export the URLs from Google Search Console that are flagged as “Indexed, though blocked by robots.txt”.

- Go through the URLs and determine whether you want these URLs indexed or not.

Check which URLs you want search engines to index and which ones you don’t want search engines to access.
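If the export contains many URLs, it can help to review them in batches, because robots.txt rules usually match path prefixes such as `/tag/`. The Python sketch below groups exported URLs by their first path segment. The CSV layout, the `URL` column name, and the example URLs are hypothetical assumptions; check the header row of your own export and adjust `url_column` to match.

```python
import csv
import io
from collections import defaultdict
from urllib.parse import urlparse

# Hypothetical sample export. The "URL" column name is an assumption;
# check the header row of your own Search Console export.
SAMPLE_EXPORT = """\
URL,Last crawled
https://example.com/tag/shoes/,2024-01-10
https://example.com/tag/boots/,2024-01-12
https://example.com/wp-admin/options.php,2024-01-11
https://example.com/blog/how-to-choose-shoes/,2024-01-09
"""


def group_by_section(csv_text: str, url_column: str = "URL") -> dict[str, list[str]]:
    """Group exported URLs by their first path segment, e.g. '/tag/'."""
    groups: dict[str, list[str]] = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        url = row[url_column].strip()
        # Split the path into non-empty segments; the home page has none.
        segments = [s for s in urlparse(url).path.split("/") if s]
        section = f"/{segments[0]}/" if segments else "/"
        groups[section].append(url)
    return dict(groups)


if __name__ == "__main__":
    for section, urls in sorted(group_by_section(SAMPLE_EXPORT).items()):
        print(f"{section} ({len(urls)} URLs)")
        for url in urls:
            print("   ", url)
```

To use it on a real export, read the file’s text (for example with `open(path, encoding="utf-8").read()`) and pass it to `group_by_section`. You can then decide per section instead of per URL.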

- Then, it’s time to edit your robots.txt file. To do that, log in to your WordPress site.

You’ll be in the WordPress Dashboard.

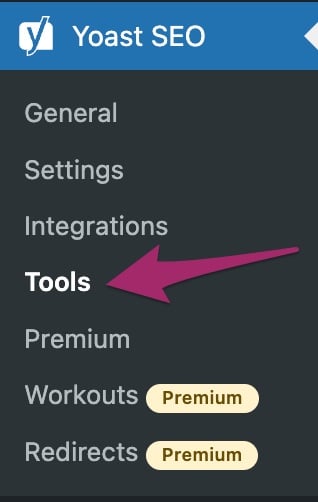

- In the admin menu, go to Yoast SEO > Tools.

In the admin menu on the left-hand side, click Yoast SEO. In the menu that appears, click Tools.

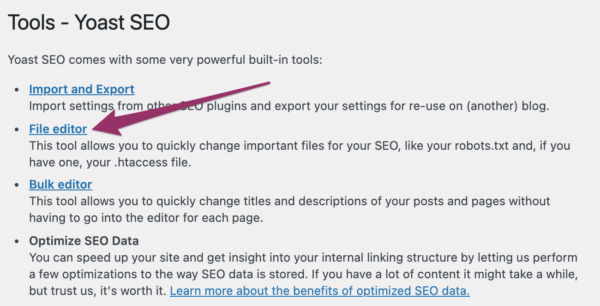

- In the Tools screen, click File editor.

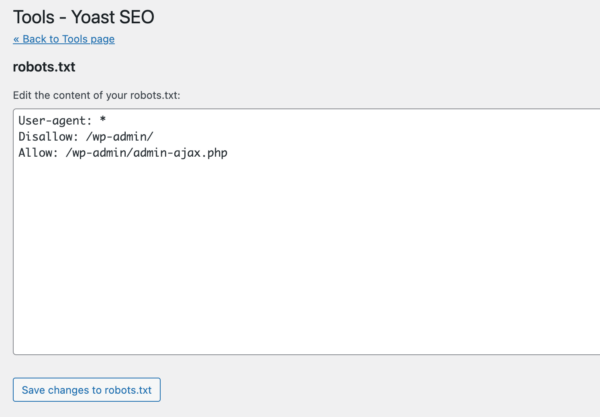

- In the File editor, edit your robots.txt file.

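Update your robots.txt file to allow Google to access the URLs that you do want indexed and to disallow Google from accessing the URLs that you don’t want crawled. Keep in mind that robots.txt controls crawling, not indexing: a disallowed URL can still be indexed when other pages link to it, which is exactly what triggers this warning. To reliably keep a page out of Google’s index, allow crawling and add a noindex meta robots tag instead. Read more about how to edit your robots.txt file. Or, read more about robots.txt in our Ultimate guide to robots.txt.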
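Before saving, you could check your edited rules against the decisions you made while going through the exported URLs. The Python sketch below uses the standard library’s `urllib.robotparser` to report any URL whose status doesn’t match your decision. The rules, the URLs, and the `Googlebot` user agent string are hypothetical examples. Note that `urllib.robotparser` only approximates Google’s behavior: it applies the first matching rule rather than the most specific one, and it doesn’t interpret the `*` and `$` wildcards Google supports, so treat the result as a sanity check, not a guarantee.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical revised robots.txt. The Allow line comes before the broader
# Disallow it overrides, because Python's parser uses the first matching
# rule, while Google uses the most specific (longest) match. With this
# order, both interpretations agree.
REVISED_ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
"""

# Hypothetical decisions from reviewing the exported URLs.
SHOULD_BE_CRAWLABLE = [
    "https://example.com/tag/shoes/",
    "https://example.com/blog/how-to-choose-shoes/",
]
SHOULD_BE_BLOCKED = [
    "https://example.com/wp-admin/options.php",
]


def check_rules(robots_txt, crawlable, blocked, agent="Googlebot"):
    """Return messages for URLs whose robots.txt status contradicts the decision."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url in crawlable:
        if not parser.can_fetch(agent, url):
            problems.append(f"still blocked: {url}")
    for url in blocked:
        if parser.can_fetch(agent, url):
            problems.append(f"not blocked: {url}")
    return problems


if __name__ == "__main__":
    mismatches = check_rules(REVISED_ROBOTS_TXT, SHOULD_BE_CRAWLABLE, SHOULD_BE_BLOCKED)
    print("\n".join(mismatches) or "All URLs match your decisions.")
```

Paste your own rules into `REVISED_ROBOTS_TXT` and your own URLs into the two lists. An empty result means the rules match your decisions, at least as far as this simplified parser can tell.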

- Click Save changes to robots.txt to save your changes.

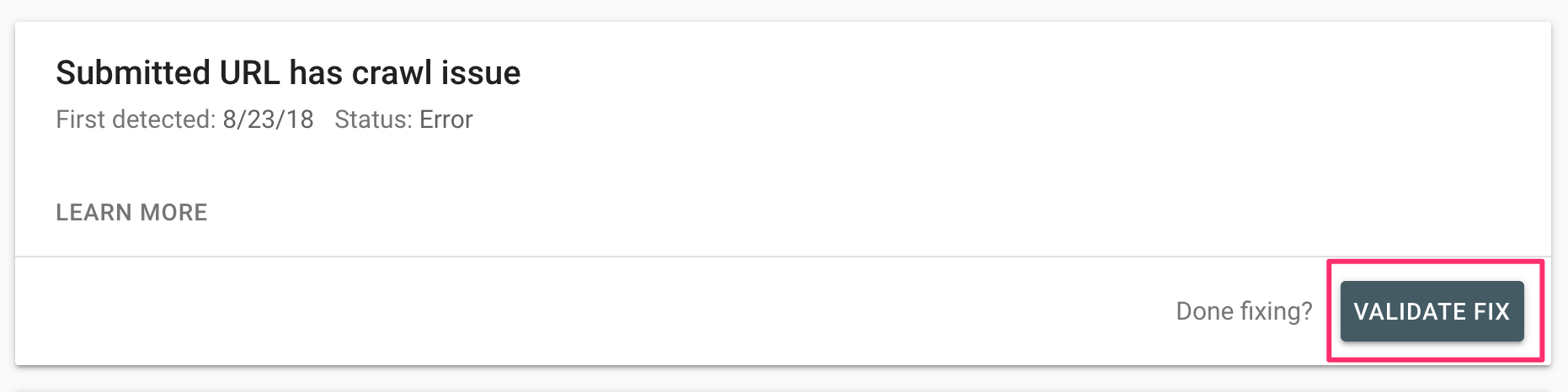

- Go back to Google Search Console and click Validate fix.

Go to the Index Coverage report (now called the Page indexing report), and then open the page that lists the issue. There, click the Validate fix button. This sends a request to Google to re-evaluate your URLs against your updated robots.txt.

Read more about robots.txt

Want to learn more about robots.txt? Check out the following articles:

- Ultimate guide to robots.txt

- Ultimate guide to the meta robots tag

- How to edit robots.txt through Yoast SEO